Chatbot Creation

Directly Integrate With Your Backend

If you have already built your own model, you can connect its backend endpoint directly to our Chat Data Integration flow. This suits, for example, a private model whose data you prefer not to send out for training, an NSFW model whose content cannot be processed by the OpenAI API in the RAG workflow, or an assistant you built with the OpenAI Assistants API and are already satisfied with.

Custom Backend Endpoint

What You Should Know

When you select custom-model as the data source, your own backend model powers the chatbot. This option doesn't use our OpenAI tokens, so the only costs are for storing conversations and daily metrics: each chat costs just 0.1 message credit. Your backend URL must accept POST requests with 'messages' and 'stream' parameters as inputs. Below is an example of the request body that will be sent:

{
  "messages": [...], // List of messages in the conversation
  "temperature": 0.0,
  "stream": true // Boolean indicating whether the reply should be streamed
}
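
As a starting point, the following Python sketch parses and validates a request body shaped like the example above. It goes beyond what this page specifies in a few labeled ways: it assumes every message is a JSON object (for example OpenAI-style {"role": ..., "content": ...}), and it treats a missing 'stream' as false and a missing 'temperature' as 0.0. ChatRequest and parse_chat_request are hypothetical names, not part of Chat Data.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class ChatRequest:
    messages: list
    stream: bool
    temperature: float


def parse_chat_request(body: bytes) -> ChatRequest:
    """Parse and validate the JSON body sent to a custom backend.

    Assumptions (not specified by Chat Data): every message is a JSON object,
    'stream' defaults to False and 'temperature' to 0.0 when omitted.
    Raises ValueError (json.JSONDecodeError is a subclass) on bad input.
    """
    data: Any = json.loads(body)
    if not isinstance(data, dict):
        raise ValueError("request body must be a JSON object")

    messages = data.get("messages")
    if not isinstance(messages, list) or not all(isinstance(m, dict) for m in messages):
        raise ValueError("'messages' must be a list of objects")

    stream = data.get("stream", False)
    if not isinstance(stream, bool):
        raise ValueError("'stream' must be a boolean")

    temperature = data.get("temperature", 0.0)
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(temperature, bool) or not isinstance(temperature, (int, float)):
        raise ValueError("'temperature' must be a number")

    return ChatRequest(messages=messages, stream=stream, temperature=float(temperature))
```

For example, parse_chat_request(b'{"messages": [{"role": "user", "content": "Hi"}], "stream": true}') returns a ChatRequest with stream set to True and temperature set to 0.0.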

Make sure your custom model handles these inputs so that it integrates seamlessly with our chatbot infrastructure. Your backend must return its response as plain text (text/plain).
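
A minimal backend that follows this contract can be written with only the Python standard library. Treat it as a sketch rather than a reference implementation: generate_reply is a hypothetical placeholder that echoes the last message (replace it with a call to your own model), the optional bearer-token check reads a hypothetical BACKEND_BEARER_TOKEN environment variable, and streaming uses HTTP/1.1 chunked transfer encoding, which is an assumption because this page does not specify how streamed text should be framed.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical: if set, requests must carry "Authorization: Bearer <token>".
EXPECTED_TOKEN = os.environ.get("BACKEND_BEARER_TOKEN")


def generate_reply(messages: list) -> str:
    """Placeholder for your own model: echoes the last message's content."""
    last = messages[-1] if messages else {}
    content = last.get("content", "") if isinstance(last, dict) else ""
    return f"You said: {content}"


class ChatHandler(BaseHTTPRequestHandler):
    # HTTP/1.1 is required for chunked transfer encoding.
    protocol_version = "HTTP/1.1"

    def do_POST(self) -> None:
        if EXPECTED_TOKEN and self.headers.get("Authorization") != f"Bearer {EXPECTED_TOKEN}":
            self.send_error(401, "Invalid or missing bearer token")
            return

        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "Body must be valid JSON")
            return
        if not isinstance(payload, dict) or not isinstance(payload.get("messages"), list):
            self.send_error(400, "Body must be a JSON object with a 'messages' list")
            return

        reply = generate_reply(payload["messages"])
        if payload.get("stream") is True:
            self._send_streamed(reply)
        else:
            self._send_plain(reply)

    def _send_plain(self, text: str) -> None:
        # Whole reply in one text/plain body with a known length.
        data = text.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def _send_streamed(self, text: str, size: int = 8) -> None:
        # Reply sent as a series of HTTP chunks while it is produced.
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Transfer-Encoding", "chunked")
        self.end_headers()
        # Slice by characters so multi-byte UTF-8 characters are never split.
        for start in range(0, len(text), size):
            chunk = text[start:start + size].encode("utf-8")
            self.wfile.write(f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n")
            self.wfile.flush()
        self.wfile.write(b"0\r\n\r\n")  # zero-length chunk ends the body


def serve(host: str = "0.0.0.0", port: int = 8000) -> None:
    """Run the sketch server; port 8000 is an arbitrary choice."""
    ThreadingHTTPServer((host, port), ChatHandler).serve_forever()
```

Calling serve() starts the server on port 8000. In production you would put it behind HTTPS, since Chat Data expects an https:// Backend API URL.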

You can test your endpoint with the following request:

curl -X POST "${endpoint}" -H "Authorization: Bearer ${bearer}" -H "Content-Type: application/json" -d '{"stream": false, "temperature": 0, "messages": [...]}'
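
If you prefer a scripted check, this Python sketch sends the same kind of request as the curl command and verifies that the backend answers with text/plain. check_endpoint is a hypothetical helper, and the single user message it sends stands in for the elided messages list, assuming OpenAI-style role/content objects. Passing stream=True exercises the streaming path too.

```python
import codecs
import json
import urllib.request


def check_endpoint(endpoint: str, bearer: str, stream: bool = False, timeout: float = 30.0) -> str:
    """POST a test conversation to a custom backend and return its plain-text reply."""
    body = json.dumps({
        "stream": stream,
        "temperature": 0,
        # Hypothetical sample conversation; the real payload comes from Chat Data.
        "messages": [{"role": "user", "content": "Hello"}],
    }).encode("utf-8")
    request = urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {bearer}", "Content-Type": "application/json"},
    )
    # urlopen raises urllib.error.HTTPError for non-2xx status codes.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        content_type = response.headers.get_content_type()
        if content_type != "text/plain":
            raise ValueError(f"expected text/plain, got {content_type}")
        charset = response.headers.get_content_charset() or "utf-8"
        decoder = codecs.getincrementaldecoder(charset)()
        parts = []
        # Read in small pieces so streamed output is consumed as it arrives;
        # the incremental decoder copes with characters split across reads.
        while chunk := response.read(256):
            parts.append(decoder.decode(chunk))
        parts.append(decoder.decode(b"", final=True))
        return "".join(parts)
```

For example, check_endpoint("https://your-endpoint.example/chat", "YOUR_TOKEN", stream=True), with your own URL and token in place of these placeholders, returns the full reply text or raises an error describing what went wrong.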

Example Use Case

As an example, we use the OpenAI Assistants API to show how to set up an API backend with minimal code and integrate it into the Chat Data workflow.

GitHub repo: https://github.com/chat-data-llc/ai-assistant-chat-data-integration

Introduction to the OpenAI Assistants API

OpenAI's Assistants API lets developers create sophisticated AI assistants tailored to their application's needs. However, once you've built your assistant, integrating it into different platforms and building the frontend infrastructure can be daunting. You need to embed the assistant in websites and in platforms such as Slack, Discord, and WhatsApp, and you also need chat history logging, rate limiting, and other protective measures.

The ai-assistant-chat-data-integration repository streamlines this process with a low-code solution that lets you deploy your existing Assistants API assistant across multiple platforms with minimal effort. Integration can take as little as 20 minutes, and your assistant becomes available on the web and on popular communication platforms without your building custom integrations from scratch.

Integration Process

Step 1: Construct and Deploy the Endpoint

Follow the instructions in the ai-assistant-chat-data-integration repository to build your endpoint and deploy it to production.

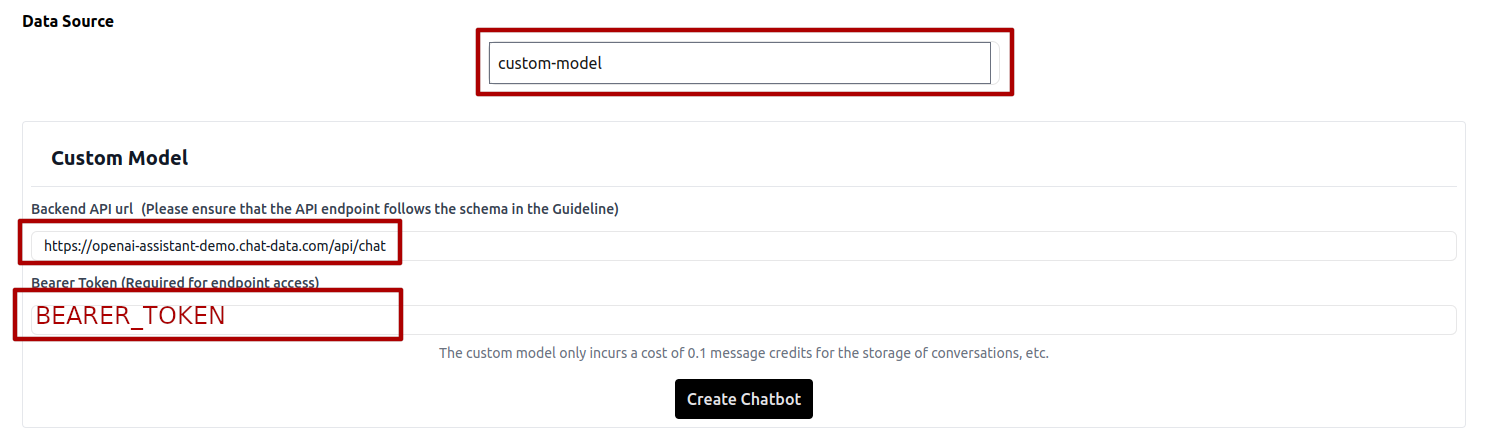

Step 2: Incorporate Your Custom Endpoint

Select custom-model as the data source and enter https://${your endpoint} as the Backend API URL.

Step 3: Create Chatbot

Click the Create Chatbot button on the page to create your chatbot.