Chatbot Training Guide

Chatbot Basic Configurations

If you train the chatbot on your own data, a few fundamental settings determine how well it performs once it is embedded in your website. Configuring them correctly helps ensure the chatbot meets your expectations.

Configuring the Chatbot

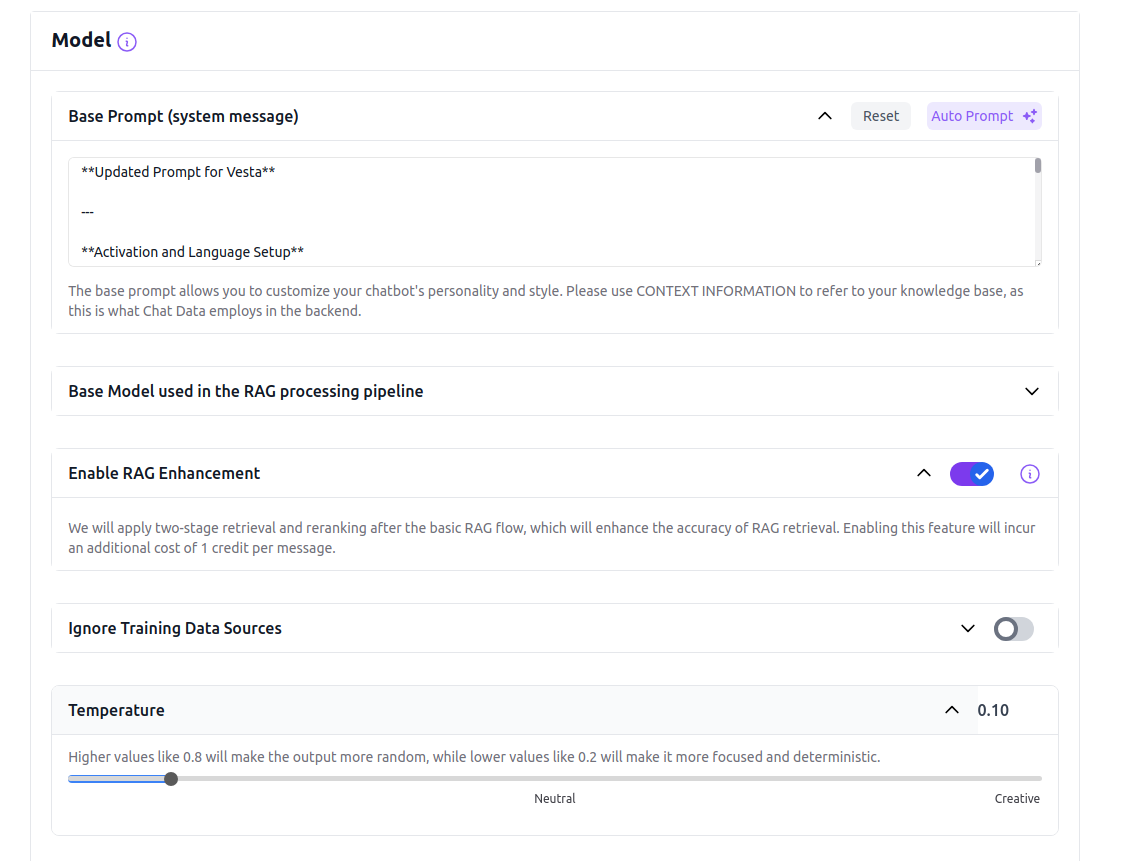

To achieve the desired performance from your chatbot, it's crucial to configure it appropriately. The three essential parameters to set up for your new chatbot are base prompt, OpenAI model, and temperature.

These parameters can be adjusted on the Model Settings page of your chatbot. Access this page via the URL formatted as https://www.chat-data.com/chatbot/{chatbotId}/settings/model.
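As a small illustration of the URL format above, the following sketch builds the Model Settings address for a given chatbot. The function name `model_settings_url` and the sample ID `abc123` are hypothetical and used only for illustration.

```python
from urllib.parse import quote

BASE_URL = "https://www.chat-data.com"


def model_settings_url(chatbot_id: str) -> str:
    """Return the Model Settings page URL for the given chatbot ID."""
    if not chatbot_id:
        raise ValueError("chatbot_id must be a non-empty string")
    # Percent-encode the ID so unusual characters cannot break the path.
    return f"{BASE_URL}/chatbot/{quote(chatbot_id, safe='')}/settings/model"


# "abc123" is a placeholder, not a real chatbot ID.
print(model_settings_url("abc123"))
```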

Base Prompt

This is the most important of the three parameters. If you can configure only one aspect of your chatbot, prioritize the base prompt. It sets the overarching tone and communicates your expectations to the chatbot. Without a properly configured base prompt, your chatbot falls back to the default setting, which can lead to unexpected or off-target responses. If you are using the custom-data-upload backend, use the term CONTEXT INFORMATION in the base prompt to refer to the uploaded data, as this is the term our backend uses.

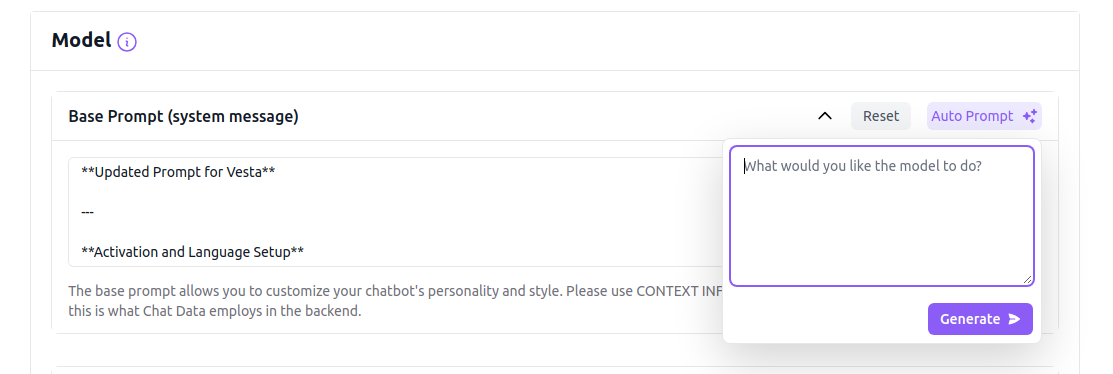

Auto Prompt

The auto prompt feature offers a quick way to generate or refine system prompts tailored to your specific needs. Whether you're creating a new prompt from scratch or looking to enhance an existing one, this tool helps create clear, well-structured prompts that are optimized for AI comprehension. This feature is available at no credit cost, though it is limited to 10 requests per hour to ensure system stability.

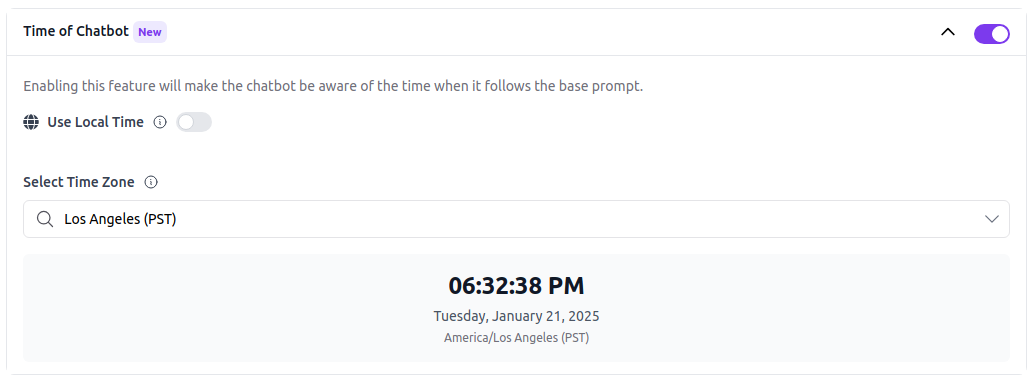

Chatbot Time

By default, the chatbot is unaware of the current time. Enable the time configuration if you want it to answer queries that depend on the current time. You can set the chatbot's time to a fixed time zone of your choice; the chatbot then applies any time rules in the base prompt using the current time in that zone. If you prefer the chatbot's time to follow the user's browser, enable the User Local Time toggle, and the chatbot applies the same time rules using the user's browser time instead.
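The difference between a fixed time zone and the user's local time can be illustrated with a short sketch. How Chat Data passes the time to the model is not described here, so the helper `time_line_for_prompt` and the output format are assumptions made purely for illustration. Fixed UTC offsets stand in for named time zones to keep the example self-contained.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def time_line_for_prompt(now_utc: datetime, zone: tzinfo, label: str) -> str:
    """Format the current time in the given zone as one line of prompt text."""
    local = now_utc.astimezone(zone)
    return f"Current time: {local.strftime('%Y-%m-%d %H:%M')} ({label})"


# A fixed instant so the example is reproducible.
now = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)

# Fixed time zone chosen by the chatbot owner (hypothetical: UTC-05:00).
fixed_zone = timezone(timedelta(hours=-5))
# Offset reported by a user's browser (hypothetical: UTC+09:00).
user_zone = timezone(timedelta(hours=9))

print(time_line_for_prompt(now, fixed_zone, "UTC-05:00"))
print(time_line_for_prompt(now, user_zone, "UTC+09:00"))
```

The same instant yields different local times, which is why a rule such as "we are closed after 6pm" can produce different answers depending on which option is enabled.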

GPT-4o mini

For the GPT-4o mini model, keep the base prompt straightforward. Complex logic might be overlooked due to the model's limitations. Utilize the few-shot prompting technique to tailor your base prompt specifically for the GPT-4o mini model, which benefits from multiple examples to enhance learning.
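A few-shot base prompt can be assembled along these lines. This is a minimal sketch: the helper `build_few_shot_prompt`, the `User:`/`Assistant:` labels, and the sample questions are illustrative assumptions, not a format required by the platform.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]]) -> str:
    """Combine a short instruction with example question/answer pairs."""
    parts = [instruction.strip(), "", "Examples:"]
    for question, answer in examples:
        parts.append(f"User: {question}")
        parts.append(f"Assistant: {answer}")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Answer only from CONTEXT INFORMATION. Keep answers under two sentences.",
    [
        ("What are your opening hours?",
         "We are open 9am to 5pm, Monday to Friday."),
        ("Do you sell gift cards?",
         "I'm sorry, I don't have that information. Please contact customer support."),
    ],
)
print(prompt)
```

The resulting text can be pasted into the base prompt field. Keeping the instruction to one or two sentences and letting the examples carry the detail fits the advice above about avoiding complex logic.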

GPT-4.0

The GPT-4.0 model accommodates more intricate logic, allowing for sophisticated interactions.

Consider these four strategies when setting up your base prompt:

Adjust the bot's personality

If you desire a more laid-back and amiable demeanor for your bot, you can experiment with wording such as: "Present yourself as a friendly and easygoing AI Assistant."

Modify how the bot handles unknown queries

Instead of responding with a generic "Hmm, I am not sure," consider instructing the bot to say something like, "I apologize, but I don't possess the information you seek; kindly reach out to our customer support."

Direct its focus towards specific topics

If you envision your bot as a specialist in a particular field, you can incorporate instructions like, "Specialize in providing information about environmental sustainability as an AI Assistant."

Establish its limitations

If you wish to restrict the bot from offering certain types of information, you can specify, "Avoid providing financial advice or information."
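The four strategies can be combined into a single base prompt. The sketch below reuses the example wording from this section; the `BasePromptParts` class and its field names are hypothetical and exist only to show one way of keeping the pieces separate while drafting.

```python
from dataclasses import dataclass


@dataclass
class BasePromptParts:
    personality: str   # how the bot presents itself
    focus: str         # topics the bot specializes in
    fallback: str      # what to say when the answer is unknown
    restriction: str   # information the bot must not provide

    def render(self) -> str:
        """Join the four parts into one base prompt, one instruction per line."""
        return "\n".join([
            self.personality,
            self.focus,
            f'If you do not know the answer, reply: "{self.fallback}"',
            self.restriction,
        ])


parts = BasePromptParts(
    personality="Present yourself as a friendly and easygoing AI Assistant.",
    focus="Specialize in providing information about environmental sustainability as an AI Assistant.",
    fallback="I apologize, but I don't possess the information you seek; kindly reach out to our customer support.",
    restriction="Avoid providing financial advice or information.",
)
base_prompt = parts.render()
print(base_prompt)
```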

OpenAI Model

You can select either the GPT-4o mini or the GPT-4.0 model for processing the uploaded data if you use the custom-data-upload, medical-chat-human, or medical-chat-vet backends. GPT-4.0 follows the base prompt much more closely and hallucinates less, but it is slower and more expensive than GPT-4o mini. If accuracy is critical, especially in reflecting the uploaded data in responses, upgrading to the Standard plan and selecting the GPT-4.0 model is advisable.

RAG Enhancement

Our system employs Cohere reranker models to optimize the retrieval process by reranking the top 1,000 text chunks for each query. After initially retrieving text chunks based on embedding vector cosine similarity, the reranker further refines the results to ensure maximum relevance. We highly recommend enabling this feature, as precise text chunk retrieval is fundamental to creating a highly accurate chatbot. In fact, a chatbot utilizing the reranker feature combined with the GPT-4o mini model can outperform a standalone GPT-4.0 model without reranking capabilities.
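The two-stage process described above, cosine-similarity retrieval followed by reranking, can be sketched as follows. This is not the platform's implementation and does not call Cohere: the `keyword_overlap` scorer is a deliberately simple stand-in for a real reranker model, and the sample chunks and vectors are made up for illustration.

```python
import math
from typing import Callable


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_then_rerank(
    query_vec: list[float],
    query_text: str,
    chunks: list[tuple[str, list[float]]],
    rerank_score: Callable[[str, str], float],
    first_stage_k: int = 1000,
    final_k: int = 5,
) -> list[str]:
    """Stage 1: top-k by embedding similarity. Stage 2: reorder with a reranker."""
    candidates = sorted(
        chunks, key=lambda c: cosine_similarity(query_vec, c[1]), reverse=True
    )[:first_stage_k]
    reranked = sorted(
        candidates, key=lambda c: rerank_score(query_text, c[0]), reverse=True
    )
    return [text for text, _ in reranked[:final_k]]


def keyword_overlap(query: str, text: str) -> float:
    """Toy stand-in for a reranker: fraction of query words found in the text."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q) if q else 0.0


chunks = [
    ("refund policy lasts 30 days", [1.0, 0.0]),
    ("shipping takes 5 days", [0.8, 0.6]),
    ("our office dog is named Rex", [0.0, 1.0]),
]
top = retrieve_then_rerank(
    [1.0, 0.1], "how many days does shipping take", chunks,
    keyword_overlap, first_stage_k=2, final_k=1,
)
print(top)
```

In this toy data the refund chunk ranks first by cosine similarity, but the second stage promotes the shipping chunk because it matches the query text more closely. That reordering is the effect the reranker is meant to provide.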

Temperature

The temperature setting affects the chatbot’s response style, determining whether it is more deterministic or creative. For high accuracy, particularly when the chatbot must adhere closely to the uploaded data, it is recommended to keep the temperature at 0. This setting prevents the chatbot from deviating too far from the provided information with creative interpolations.
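To see why a lower temperature gives more deterministic output, consider how temperature is commonly applied when sampling from a language model: the model's scores (logits) are divided by the temperature before being converted to probabilities. The sketch below shows that general technique; treating temperature 0 as "always pick the top-scoring token" is a common convention and an assumption here, not a statement about the exact behavior of the platform or the underlying API.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities, sharpened or flattened by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Greedy limit: all probability mass on the highest-scoring option.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As the temperature rises, probability spreads to the lower-scoring candidates, which is where more varied and less data-bound wording comes from.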

Ignore Your Uploaded Data

To create a generic chatbot that relies solely on the GPT-4o mini or the GPT-4.0 model, enable the Ignore Uploaded Data Source option. With this option active, the chatbot generates responses using only the selected base model (GPT-4o mini or GPT-4.0), following your base prompt.

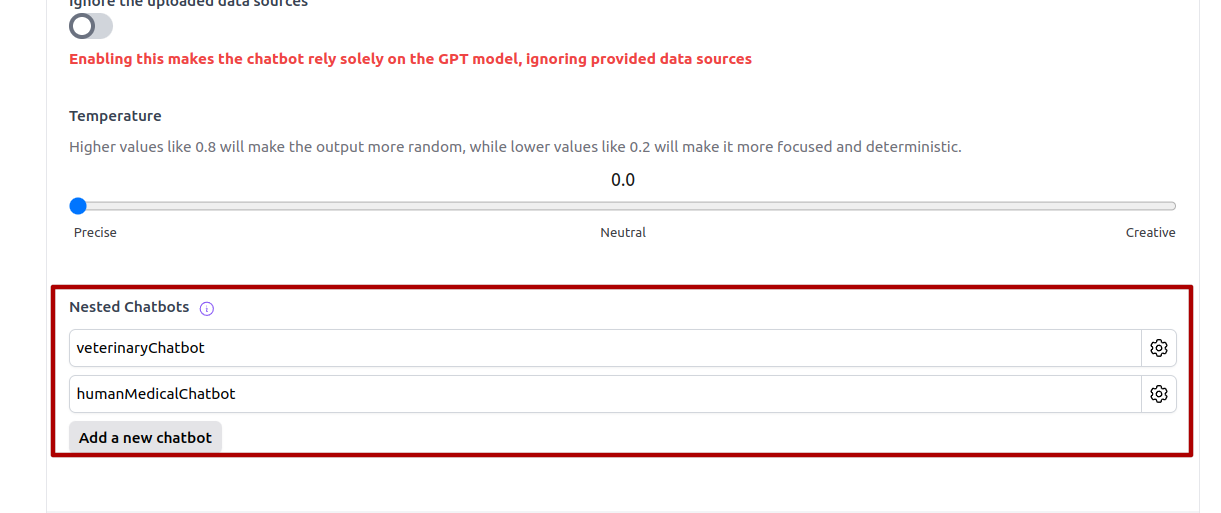

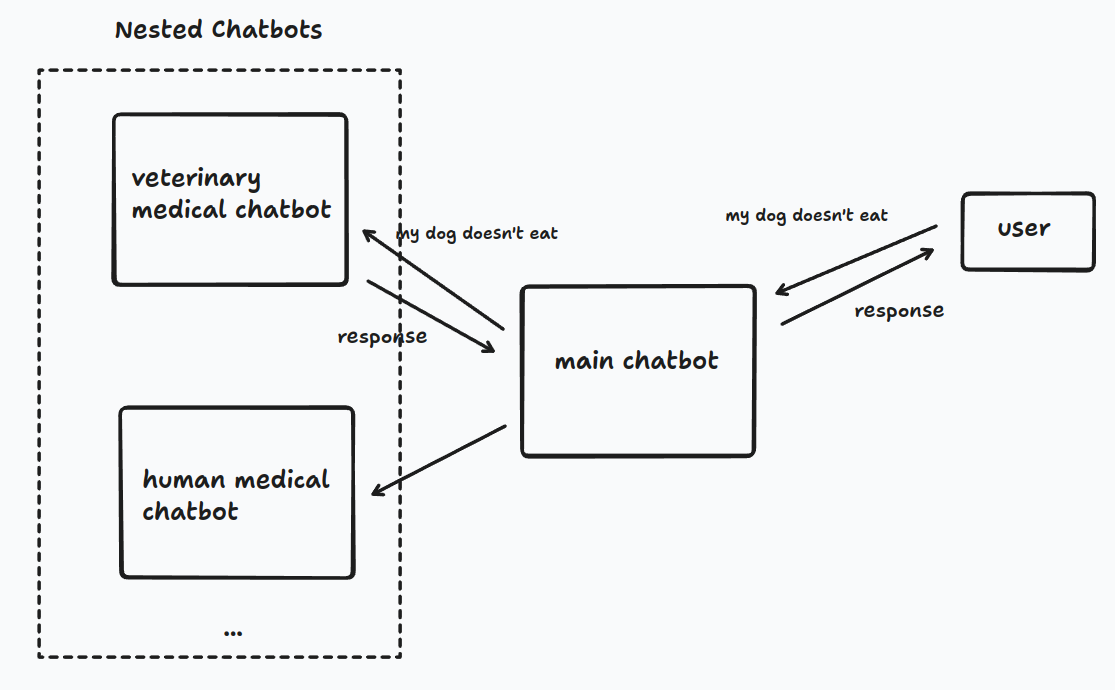

Nested Chatbots

Sometimes a single chatbot cannot achieve your goal. For example, you may want to upload your own data to train a chatbot that reflects your company database, while also taking advantage of our medical-chat models or of other chatbots you have created whose data cannot fit into your existing chatbot because of character capacity limits. In this situation, you can use nested chatbots so that your first-layer chatbot can forward queries to the nested chatbots for answers. Here is the flow to demonstrate the whole process:

The nested chatbots can be any chatbots created in Chat Data and can have their own nested chatbots as well. There are several parameters that you need to fill in:

- Chatbot Id: The ID of the chatbot you want to depend on.

- Function Name: A semantically understandable function name for the chatbot.

- Description: A detailed description of the chatbot's capability and its use.

- API Key: You can create or find the API key on the account page that owns the chatbot.
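The four parameters above can be thought of as one small record per nested chatbot. The sketch below models that record and checks it before use. The `NestedChatbot` class, the rule that a function name must look like an identifier, and all sample values are assumptions for illustration; the platform's own validation rules are not documented here.

```python
import re
from dataclasses import dataclass

# Assumed rule: a function name made of letters, digits, and underscores.
FUNCTION_NAME_PATTERN = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


@dataclass(frozen=True)
class NestedChatbot:
    chatbot_id: str      # ID of the chatbot to depend on
    function_name: str   # semantically meaningful name
    description: str     # what the nested chatbot can do and when to use it
    api_key: str         # key from the account that owns the nested chatbot

    def validate(self) -> None:
        """Raise ValueError if any field is empty or the name is malformed."""
        for field_name in ("chatbot_id", "function_name", "description", "api_key"):
            if not getattr(self, field_name).strip():
                raise ValueError(f"{field_name} must not be empty")
        if not FUNCTION_NAME_PATTERN.match(self.function_name):
            raise ValueError("function_name should contain only letters, digits, and underscores")


# Placeholder values only; substitute your own chatbot ID and API key.
vet_bot = NestedChatbot(
    chatbot_id="YOUR_CHATBOT_ID",
    function_name="ask_veterinary_assistant",
    description="Answers questions about pet health; use it for any animal medical query.",
    api_key="YOUR_API_KEY",
)
vet_bot.validate()
print(vet_bot.function_name)
```

A specific description matters most here, since it is what tells the first-layer chatbot when a query should go to the nested one.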

You can set multiple nested chatbots on the https://www.chat-data.com/chatbot/{chatbotId}/settings/model page as follows: