Chatbot Training Guide

Train Chatbots With Clean Data

The data you provide serves as the CONTEXT INFORMATION the chatbot uses to answer queries. The cleaner the CONTEXT INFORMATION, the more likely the chatbot is to identify the key information it needs to answer each query accurately. This is especially important when GPT-4o mini is the underlying model.

Sources of Polluted Data

Polluted data can be introduced when text is extracted from PDF files or when websites are crawled:

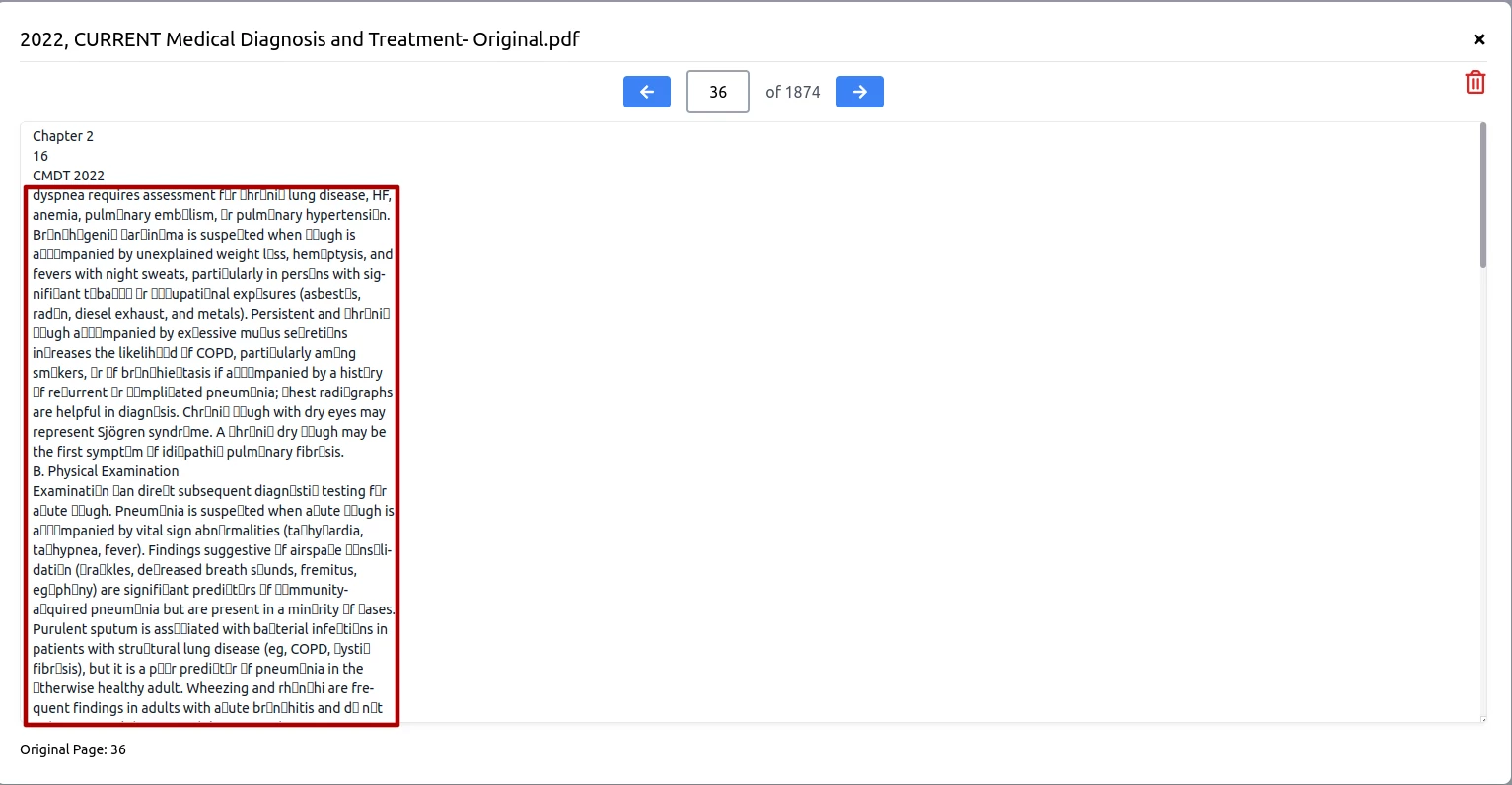

Unrecognized Unicode

Some PDFs use specialized Unicode encodings as a copy-protection measure. Extracting text from these files can produce "garbled code": strings of characters that are unreadable and meaningless. When garbled code is used as context information, it can degrade the chatbot's answers. To verify the extracted text, click the eye icon to preview the content and check for garbled passages. Below is an example of garbled code extracted from a PDF file.

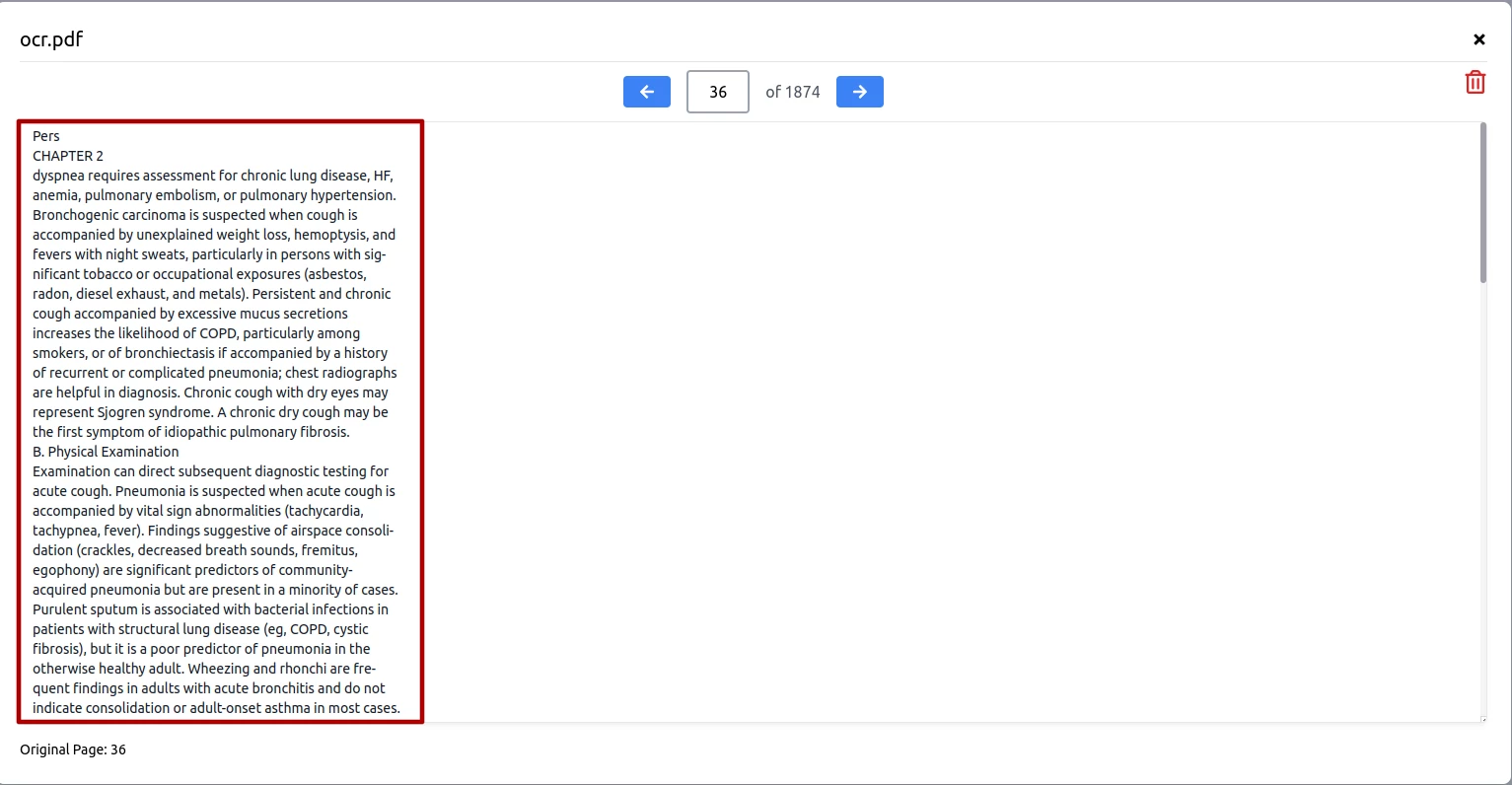
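If you have many PDFs to check, a rough automated test can flag candidates before you preview them. The Python sketch below is an illustrative heuristic, not a feature of the chatbot platform; the function names and the 5% threshold are assumptions chosen for the example. It measures the share of non-whitespace characters that are private-use, unassigned, or control code points, or the U+FFFD replacement character.

```python
import unicodedata

# Unicode general categories that rarely appear in normal readable text:
# Co = private use, Cn = unassigned, Cc = control characters.
SUSPECT_CATEGORIES = {"Co", "Cn", "Cc"}


def garbled_ratio(text: str) -> float:
    """Return the fraction of non-whitespace characters that look garbled."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    suspect = sum(
        1
        for c in chars
        # U+FFFD is the replacement character extractors emit for undecodable bytes.
        if c == "\ufffd" or unicodedata.category(c) in SUSPECT_CATEGORIES
    )
    return suspect / len(chars)


def looks_garbled(text: str, threshold: float = 0.05) -> bool:
    """Heuristic check: True if the garbled-character share exceeds threshold."""
    return garbled_ratio(text) > threshold
```

For example, `looks_garbled(extracted_text)` returns `True` when more than 5% of the characters fall into those groups. Garbling that maps to ordinary letters will not be detected, so the eye-icon preview remains the reliable check.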

To fix garbled code, run the PDF through an OCR tool that re-recognizes the text and writes a new PDF with a standard text layer. For example, on Ubuntu you can install OCRmyPDF (`sudo apt install ocrmypdf`) and run:

```shell
ocrmypdf --force-ocr input.pdf output.pdf
```

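The `--force-ocr` flag makes OCRmyPDF rasterize every page and run OCR on it even when the page already contains text, so the garbled text layer is replaced. Upload the converted file (output.pdf) again; its extracted text should now be readable.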
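To convert a whole folder of affected PDFs, the same command can be wrapped in a short Python script. This is a minimal sketch assuming the `ocrmypdf` command is installed and on your PATH; `convert_folder` and the folder paths are hypothetical names, and the `run` parameter exists only so the function can be tested without OCRmyPDF.

```python
import subprocess
from pathlib import Path
from typing import Callable, List


def build_ocr_command(src: Path, dst: Path) -> List[str]:
    """Build the ocrmypdf --force-ocr invocation for a single file."""
    return ["ocrmypdf", "--force-ocr", str(src), str(dst)]


def convert_folder(
    in_dir: Path,
    out_dir: Path,
    run: Callable[..., object] = subprocess.run,
) -> List[Path]:
    """Re-OCR every *.pdf in in_dir, writing same-named files to out_dir.

    The glob is case-sensitive on Linux, so files ending in .PDF are skipped.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for src in sorted(in_dir.glob("*.pdf")):
        dst = out_dir / src.name
        # check=True raises CalledProcessError if ocrmypdf fails on a file.
        run(build_ocr_command(src, dst), check=True)
        written.append(dst)
    return written
```

For example, `convert_folder(Path("garbled_pdfs"), Path("ocr_pdfs"))` writes each converted file under the same name in `ocr_pdfs`, ready to upload. Because `check=True` is used, the script stops at the first file OCRmyPDF fails on.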

Redundant Metadata

PDF files and websites often contain extensive metadata, such as repeated page headers and footers or navigation menus, that adds no useful information but takes up a large share of the text. Removing it streamlines the data so that relevant content gets that space, although doing so usually takes considerable manual effort. This is a key reason we recommend that Shopify/WooCommerce store owners import product details directly rather than scraping their websites: scraping pulls this irrelevant metadata into the data along with the product information.

To address this, manually condense the useful information and then upload the consolidated content to the database.
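Condensing by hand remains the recommended approach, but a script can handle a first pass on one common kind of clutter: lines that repeat on most pages, such as running headers, footers, and site navigation. The Python sketch below is an assumption-based heuristic rather than a built-in feature; `strip_repeated_lines` and its 60% default are illustrative choices. It takes one string per PDF page or crawled web page and drops any line that appears on at least 60% of pages (and on at least two).

```python
from collections import Counter
from typing import List


def strip_repeated_lines(pages: List[str], min_share: float = 0.6) -> List[str]:
    """Remove lines that recur across pages, returning one cleaned string per page.

    A line counts as boilerplate if it appears on at least `min_share` of the
    pages and on at least two pages. Blank lines are dropped as well.
    """
    if not pages:
        return []

    # Count how many pages each distinct (stripped) line appears on.
    page_counts: Counter = Counter()
    for page in pages:
        page_counts.update({line.strip() for line in page.splitlines() if line.strip()})

    # Never treat a line as boilerplate unless it shows up on 2+ pages.
    cutoff = max(2, min_share * len(pages))

    cleaned = []
    for page in pages:
        kept = [
            line.strip()
            for line in page.splitlines()
            if line.strip() and page_counts[line.strip()] < cutoff
        ]
        cleaned.append("\n".join(kept))
    return cleaned
```

Lines that vary from page to page, such as "Page 3 of 10", are not caught and still need manual cleanup, so review the output before uploading it.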